Prefect vs Airflow – How are they different and how to select

Apache Airflow and Prefect are both data orchestration tools, but each is distinct and serves a different audience. Our senior data engineer, Kannabiran, who has worked with both tools, shares his insights on Prefect vs Airflow. This blog covers their benefits, limitations, how they compare, and how to choose between them.

Kannabiran

June 25, 2025

8 mins

Apache Airflow and Prefect – What are they?

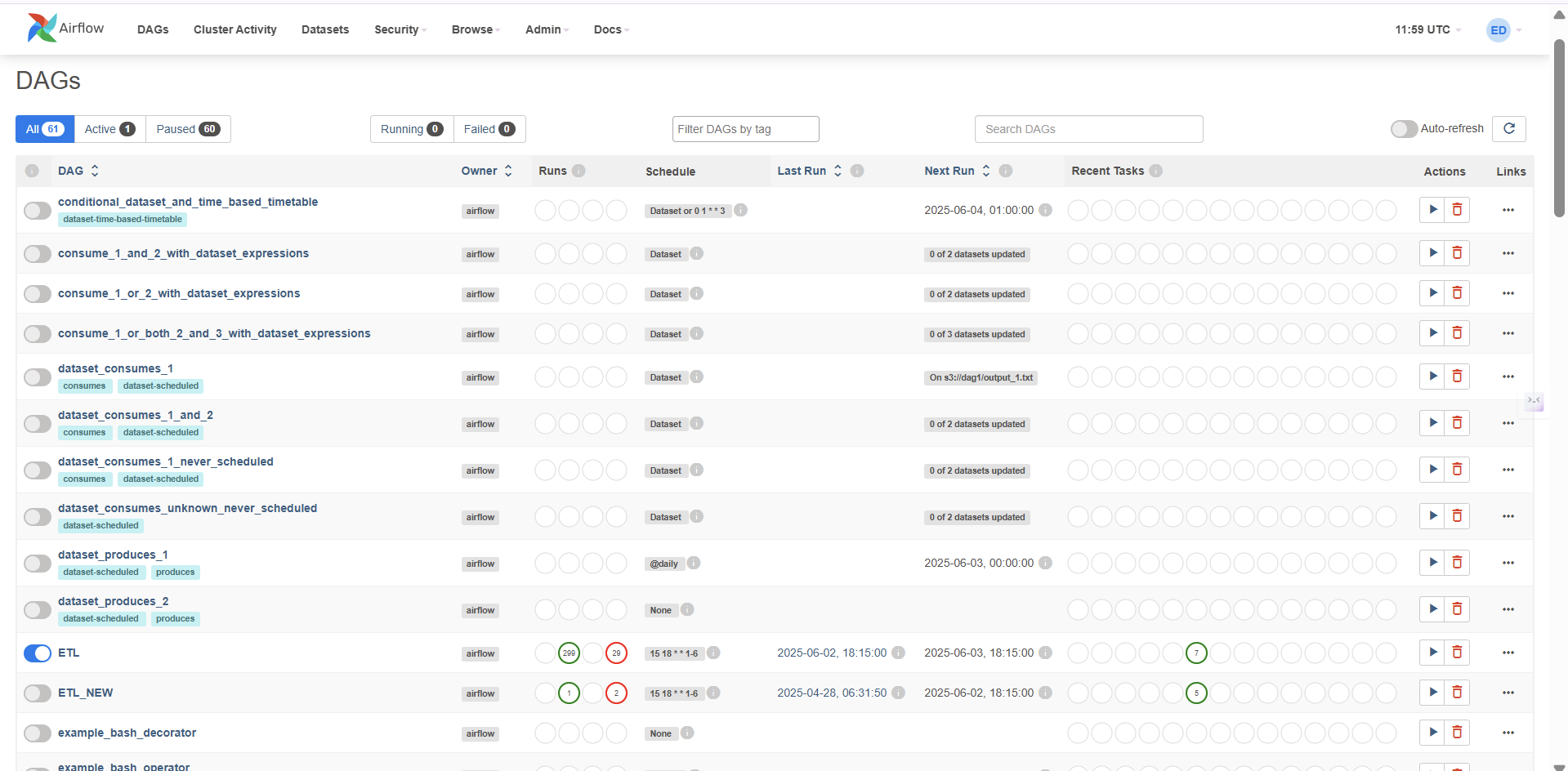

Apache Airflow, first developed at Airbnb and later donated to the Apache Software Foundation, is a go-to tool for defining workflows as Directed Acyclic Graphs (DAGs) in Python scripts. With features like time-based scheduling and a rich UI, it is a dependable choice for many organizations.

Prefect uses a more modern, hybrid architecture that supports both time-based and event-driven workflow execution. With Prefect, users build workflows from Python functions decorated with @flow and @task, which makes them more intuitive and dynamic. Organizations seeking greater flexibility and modern infrastructure may prefer it.

How do they work?

Airflow

Airflow is great at automating complex, repeatable tasks, following rules strictly, and making sure nothing falls through the cracks.

Components of Airflow

| Components | Description |
|---|---|
| Scheduler | Reads DAG definitions and triggers task execution. |
| Executor | Runs tasks through backends like Celery, Kubernetes, etc. |
| Web server | UI to monitor DAGs, logs, task status, and more. |
| Metadata database | Stores DAG runs, logs, configurations, etc. |

Here is how Airflow works.

You define tasks like a checklist, with each task running after the previous one. Example:

Step 1: Pull sales data → Step 2: Clean it → Step 3: Merge with CRM data → Step 4: Email dashboard

Airflow organizes these tasks into a flow called a DAG.

The scheduler scans the DAGs at regular intervals and triggers them based on their schedule.

The schedule_interval parameter in the DAG code defines how often the DAG runs (newer Airflow releases name this parameter schedule).

For an existing DAG, it is easy to do manual and ad-hoc runs through the tool UI or CLI.

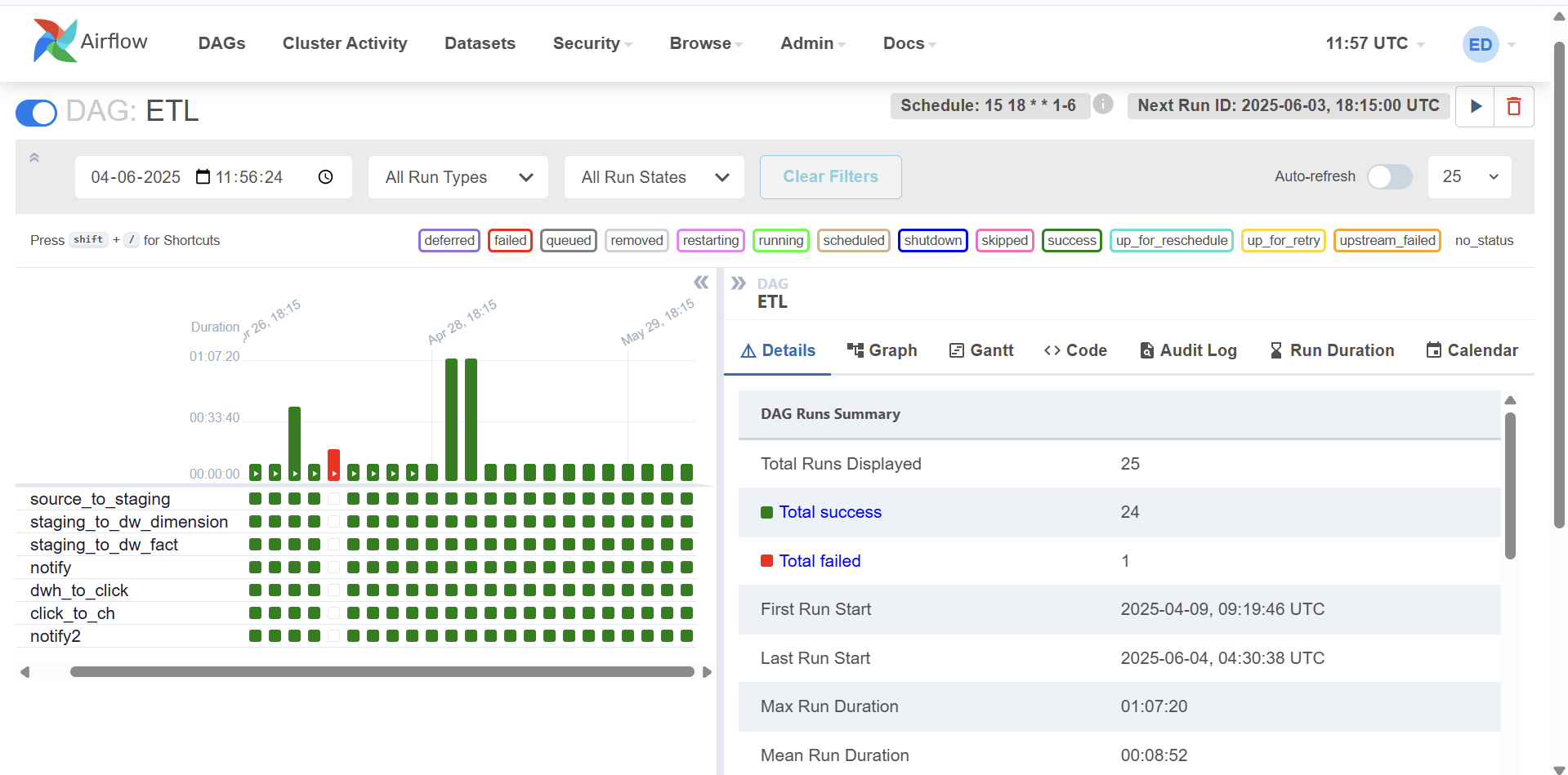

There's a behind-the-scenes database tracking which tasks ran, what succeeded or failed, duration, etc.
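The ordering idea behind the four-step example above can be sketched in plain Python. This is not Airflow's API; it is a minimal illustration that uses the standard library's `graphlib` to derive a valid run order from declared dependencies. The task names are hypothetical, taken from the example steps.

```python
from graphlib import TopologicalSorter

# Each key maps to the set of tasks it depends on.
# Names are hypothetical, mirroring the example steps above.
dependencies = {
    "pull_sales_data": set(),
    "clean_data": {"pull_sales_data"},
    "merge_with_crm": {"clean_data"},
    "email_dashboard": {"merge_with_crm"},
}


def execution_order(deps):
    """Return one valid run order; graphlib raises CycleError if deps contain a cycle."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

Because a cycle makes a valid order impossible, `graphlib` rejects it, which is the same reason a workflow graph has to be acyclic.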

Airflow is great. But we ran into a great deal of difficulty when we had to do ad-hoc scheduling. All we wanted it to do was ‘run this workflow just once, tomorrow at 3 PM’. But we had to go back into the code, make a change, and deploy again. It’s not great for ad-hoc, one-time things unless already planned. - One of our clients during a discovery call

Prefect

Prefect, like Airflow, is a modern data orchestration tool that schedules, automates, and executes data workflows, so data teams don’t have to run scripts manually or wait for pipeline changes.

Components of Prefect

| Components | Description |
|---|---|
| Flow | Business workflows comprising tasks. |
| Task | A single task in the workflow. |
| Agents | Task executor, which runs the task in cloud or on-prem, wherever data lives. |
| Deployment | Defines scheduling, parameters, infrastructure for execution. |
| Orchestration layer | Control layer that manages logs, schedules, failures, retries, and more. |
| State | Status tracker of every task and what happened with it. |

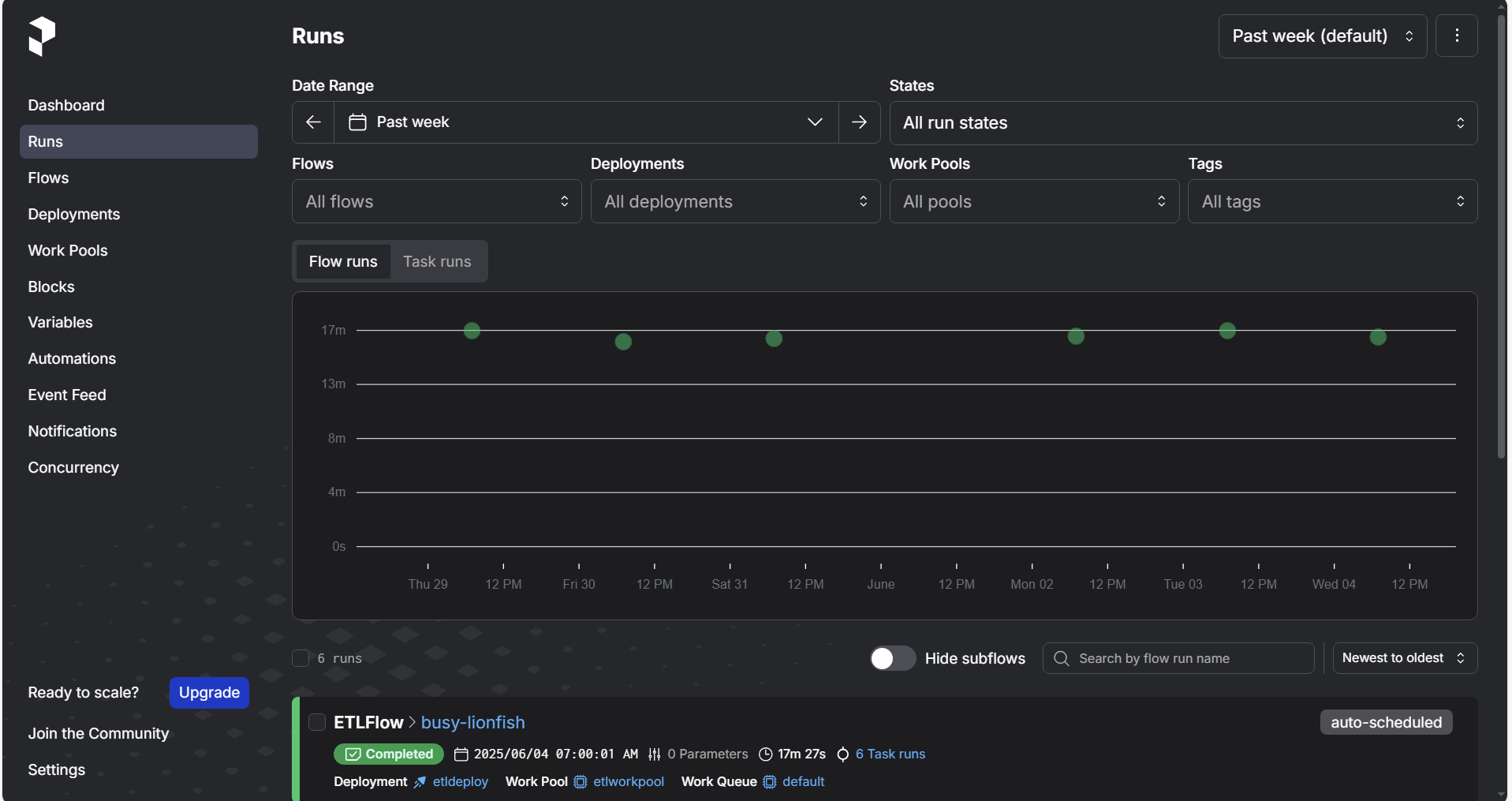

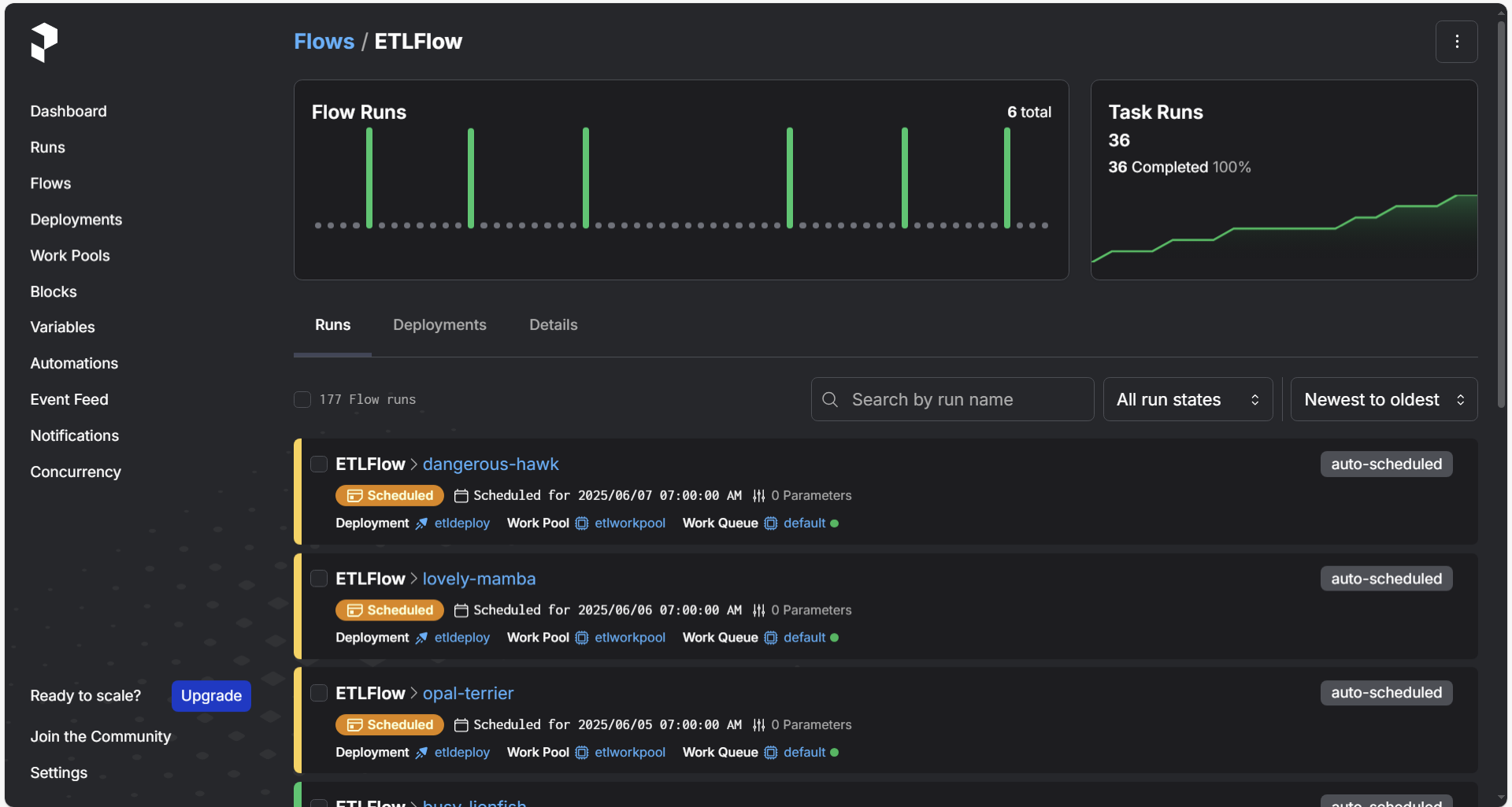

Flows and tasks are regular Python functions in Prefect, and the DAG is built dynamically at runtime. You can run Prefect workflows locally, in Docker, on Kubernetes, or via Prefect Cloud, and you don’t have to manage schedulers or servers if you use Prefect Cloud.
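As a rough sketch of what "regular Python functions" means here, the flow below decides how many task runs to make from its runtime input. The function names, source names, and placeholder rows are hypothetical. The `try`/`except` fallback is an addition for portability, so the example also runs as plain Python where Prefect is not installed.

```python
try:
    from prefect import flow, task  # real decorators when Prefect is installed
except ImportError:
    # Fallback (an assumption for portability): no-op decorators so the
    # sketch still runs as plain Python without Prefect.
    def task(fn):
        return fn

    def flow(fn):
        return fn


@task
def pull_rows(source: str) -> list:
    # Placeholder for a real extract step; returns made-up rows.
    return [f"{source}-row-{i}" for i in range(2)]


@task
def count_rows(rows: list) -> int:
    return len(rows)


@flow
def refresh_dashboards(sources: list) -> dict:
    # Ordinary Python control flow: the number of task runs depends on the
    # runtime value of `sources`, not on a graph declared ahead of time.
    return {source: count_rows(pull_rows(source)) for source in sources}


if __name__ == "__main__":
    print(refresh_dashboards(["crm", "sales"]))
```

Passing a longer list of sources produces more task runs without any change to the flow definition.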

How does Prefect work?

Install the CLI.

Imagine any series of business tasks, like pulling data from a CRM and updating dashboards at regular intervals. Prefect combines these separate tasks into one ‘flow’.

Write the flow in Python with @flow and @task.

Create the schedule (daily, hourly, etc.) on which it must run.

Prefect triggers the flow automatically on the set schedule.

Prefect monitors the tasks and re-runs them if they fail.
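The monitor-and-retry step above can be illustrated without Prefect. The sketch below is a plain-Python stand-in, not Prefect's implementation: `with_retries` is a hypothetical decorator that re-runs a failing function and records a state for each attempt. In Prefect itself, tasks accept a `retries` argument and the orchestration layer tracks state for you.

```python
import functools


def with_retries(retries=2):
    """Hypothetical stand-in for a retrying task decorator (not Prefect's API)."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            wrapper.states = []  # one entry per attempt of this call
            for attempt in range(retries + 1):
                try:
                    result = fn(*args, **kwargs)
                except Exception as exc:
                    wrapper.states.append(f"Failed: {exc}")
                    if attempt == retries:
                        raise  # retries exhausted, surface the error
                else:
                    wrapper.states.append("Completed")
                    return result
        wrapper.states = []
        return wrapper
    return decorator


calls = {"count": 0}


@with_retries(retries=2)
def pull_crm_data():
    # Fails on the first call to simulate a flaky source, then succeeds.
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("CRM unavailable")
    return [{"customer": "a", "orders": 3}]


rows = pull_crm_data()
print(pull_crm_data.states)
```

The recorded states play the role of the State component in the table above: they show what happened on each attempt, not only the final result.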

If Airflow is like a traditional operations manager with a clipboard, Prefect is a smart, digital manager that accomplishes everything automatically while keeping everyone in the loop.

Prefect is more developer-friendly and has a flexible scheduling model. You can modify, pause, or delete a schedule anytime from the UI or CLI without touching the flow code, making experimentation and temporary runs extremely easy. - Senior data engineer, datakulture

Limitations of Airflow & Prefect

While both tools are good in their own way, there are some limitations too.

Airflow

Static DAGs. Complex for dynamic workflows.

Poor support for event-based & on-demand workflow execution.

No native support for Windows. Need to use Docker or WSL (Windows Subsystem for Linux).

Schedules and DAG code are heavily interdependent. Any change in scheduling requires modifying and redeploying the DAG, which can be strenuous and time consuming for many.

Prefect

Ecosystem and plugin support need to improve.

Migrating from Prefect 1 to Prefect 2 requires rewriting code.

If you want advanced features like centralized monitoring or autoscaling, you need Prefect Cloud, which may not suit every self-hosting or privacy requirement.

Final verdict

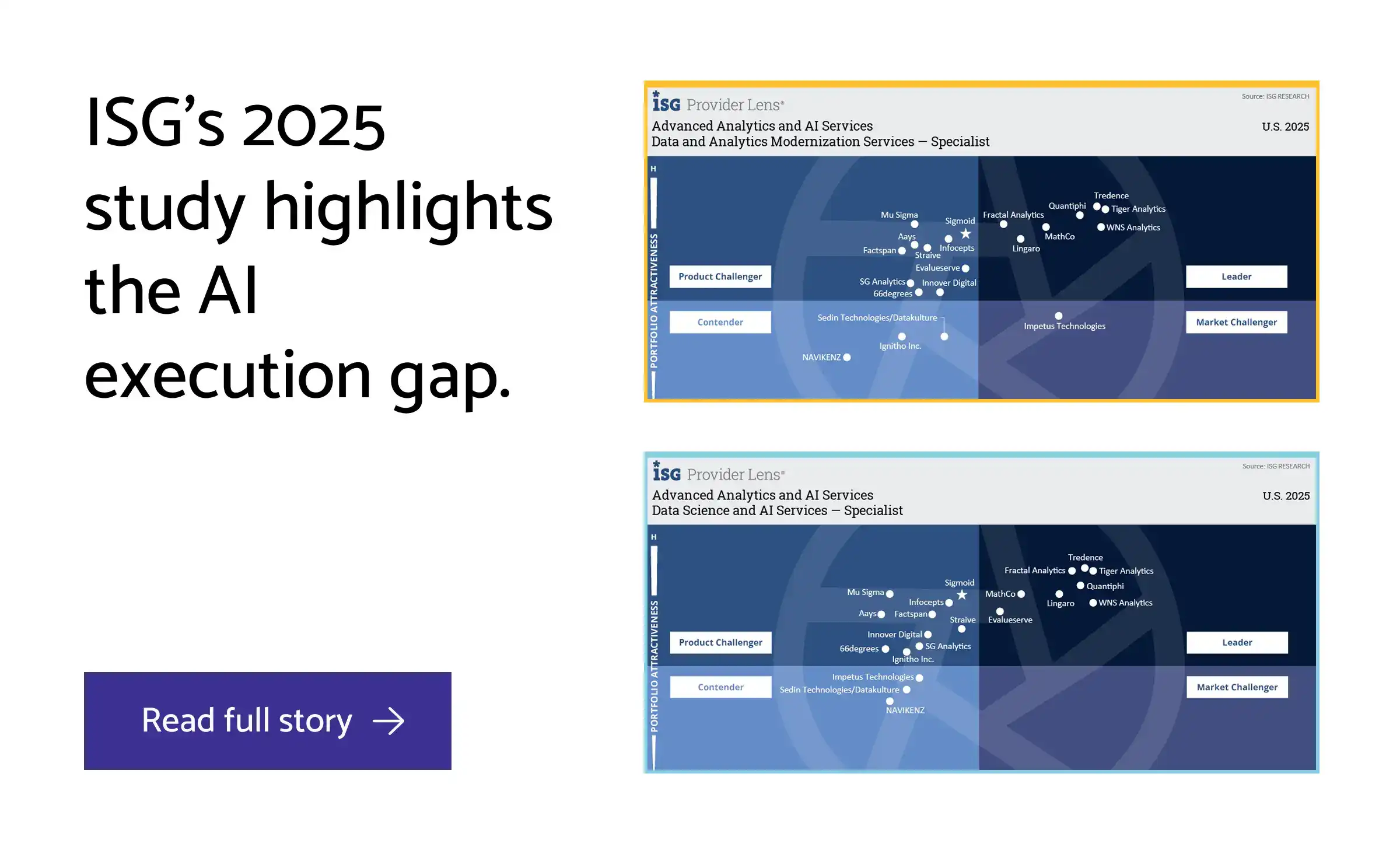

Choosing Airflow or Prefect depends on your company’s goals, team strength, existing priorities, and many other factors. Here’s a short decision matrix for Prefect vs Airflow that can help you decide.

| Factors | Airflow | Prefect |
|---|---|---|
| Initial setup | ❌ Complex to set up: needs a scheduler, metadata DB, web server, and workers. | ✅ Easy to set up. If you want low infra setup and cost, Prefect Cloud is the best choice. |
| Deployment overhead | ❌ Needs infra, CI/CD for DAG versioning, and DevOps. | ✅ Frictionless deployment, with much less need for DevOps. |
| Scalability | ✅ Highly scalable, but needs ops investment. | ✅ Similar to Airflow, but geared to cloud-native setups. |
| Community support | ✅ Mature community and support from trained experts. | ❌ Not as big as Airflow’s, but the Slack community is growing fast. |
| Extensibility | ✅ Widely supported. | ❌ Custom integrations are possible with Python, but right now it’s a small ecosystem. |
| Use case fit | ✅ Great for mature, large data teams with legacy systems, tight controls, and an internal infra team. | ✅ Suitable for cross-functional teams in need of multi-dimensional analytics. |

If you value stability, a mature ecosystem, and time-based scheduling, Airflow remains a strong choice. If flexibility, developer experience, native Windows support, and modern architecture matter more, Prefect might be a better fit.

One of our clients was looking for a data orchestration tool that works well with their on-prem databases, but also taps into their cloud data sources like GA, Meta, Klaviyo, etc. They also wanted to prioritize faster execution with dev-friendly workflows, without infra complications and delays. We suggested Prefect, as it suited the use case better.

The team wanted to build an extensive marketing analytics solution that would cut down their manual jobs and ad hoc executions and make real-time data available to their business teams. The solution included:

API calls to collect data from cloud sources like Google Analytics API, Facebook Ads API, and Klaviyo email metrics.

Database pipelines powered by Prefect (on-premises version) to tap into core data in PostgreSQL, run transformations, and make data reporting ready.

Custom schedules for hourly, daily, and weekly refreshes.

Whenever there is a need for an ad-hoc flow, their team could do it via Prefect UI without writing a line of code.

Email notifications enabled to let the team know when their attention is required.

This allowed their team to generate reports on demand, reducing a lot of manual work.

If you need similar help around Prefect, Airflow, or any other data engineering tools, do let us know. We can have a quick discovery call to discuss and see how we can work together.

by Kannabiran

Kannabiran, a senior and Lead Data Engineer at datakulture, has led the strategy and delivery of cost-effective, efficient data infrastructures for companies across industries. A strong advocate of metadata-driven architecture, he brings deep knowledge of modern data engineering concepts and tools, and actively shares this expertise through blogs, videos, and community conversations. He is also the driving force behind building our novel ETL framework, while also guiding and nurturing young data engineers and interns.