Feature engineering - Everything you need to know

Feature engineering plays an important role in machine learning model building. Read what our ML experts have to say about the steps involved in this process, its importance, benefits, and more - all with real-world examples.

Thulasi

Dec 4, 2025 |

12 mins

What is feature engineering?

When you search for how to build an ML model, feature engineering comes up near the top, because it is one of the earliest steps and can make or break the results. In feature engineering, a data scientist carefully selects, transforms, and creates features from raw data to improve the model’s performance. It can be compared to how a chef meticulously selects, washes, and chops ingredients to enhance the taste and presentation of the final dish.

Here is a quick example of how features and feature engineering work.

Let’s say that you are building a model to predict house prices in a city. You will not only use the raw columns of data, such as the house’s size and number of rooms. You will also craft new features, such as proximity to schools, crime rates, and historical price trends, so that the model captures the most relevant information for accurate predictions.

The example shows how the choice of features shapes a model’s performance and accuracy.

Step-by-step process involved in feature engineering

Many data consulting companies follow a multi-step process that involves the steps below.

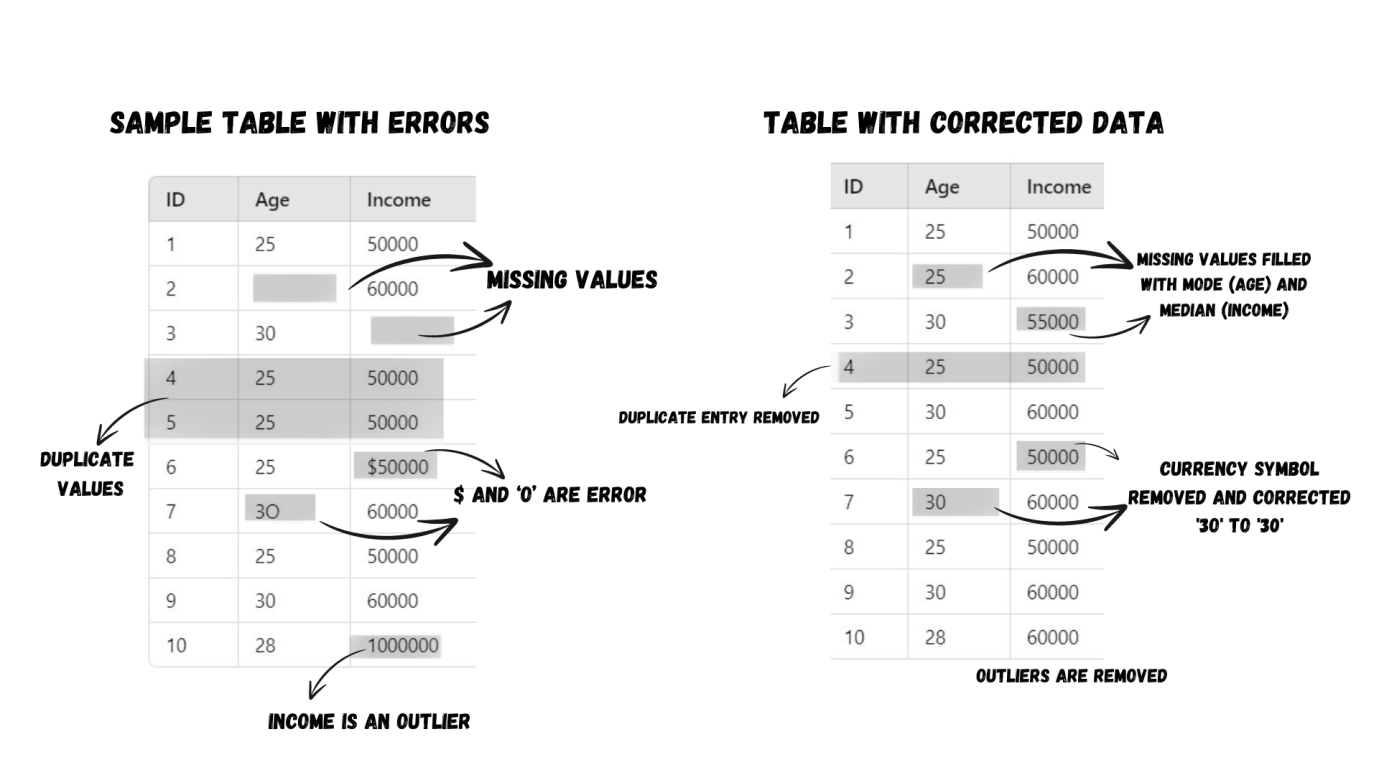

Data cleansing

Just as a chef washes and cleans ingredients before cooking, the dataset must be cleansed to remove inconsistencies and flaws. This is the groundwork for reliable and productive data analysis.

Some data cleansing techniques are:

Handling missing values

A missing ingredient could ruin a dish. Likewise, missing values in a dataset can hurt a model's performance. In such cases, you can:

Impute the missing value, for example with the column's mean or median, like substituting a missing ingredient with a similar one.

Delete the row or column with the missing value, like removing a problematic ingredient altogether.
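To make the two options concrete, here is a minimal Python sketch on a few hypothetical house records, where `None` marks a missing value. The helper names `impute_median` and `drop_missing` are illustrative, and median imputation is just one common choice; mean or mode imputation works the same way.

```python
from statistics import median

# Hypothetical house records; None marks a missing value
rows = [
    {"size_sqft": 1200, "rooms": 3},
    {"size_sqft": None, "rooms": 2},
    {"size_sqft": 950, "rooms": None},
    {"size_sqft": 1500, "rooms": 4},
]

def impute_median(records, column):
    """Replace missing values in `column` with the median of the observed values."""
    observed = [r[column] for r in records if r[column] is not None]
    fill = median(observed)
    return [{**r, column: fill if r[column] is None else r[column]} for r in records]

def drop_missing(records):
    """Delete every row that has at least one missing value."""
    return [r for r in records if all(v is not None for v in r.values())]

imputed = impute_median(rows, "size_sqft")  # keeps all 4 rows, fills size_sqft with 1200
complete = drop_missing(rows)               # keeps only the 2 fully observed rows
```

Imputation keeps every row but only fixes the column you choose (`rooms` is still missing in the third row), while deletion is simpler but throws away information, so it works best when few rows are affected.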

Removing duplicates

Adding the same ingredient twice could throw off the balance of a recipe. Likewise, duplicate entries in a dataset can distort statistical analyses, so identifying and eliminating them is a priority for a unique and accurate dataset.
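A minimal sketch of exact-duplicate removal in plain Python, assuming each record is a dictionary of hashable values. `drop_duplicates` is a hypothetical helper name here; pandas users would typically reach for `DataFrame.drop_duplicates` instead.

```python
def drop_duplicates(records):
    """Keep the first occurrence of each identical record, preserving order."""
    seen = set()
    unique = []
    for r in records:
        key = tuple(sorted(r.items()))  # order-independent fingerprint of the record
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique

rows = [
    {"id": 1, "city": "Austin"},
    {"id": 2, "city": "Dallas"},
    {"id": 1, "city": "Austin"},  # exact duplicate of the first row
]
print(drop_duplicates(rows))  # → [{'id': 1, 'city': 'Austin'}, {'id': 2, 'city': 'Dallas'}]
```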

Error correction

Data scientists must correct errors and typos in the data and ensure consistent formatting. You don’t want your dish to be too salty because of an incorrect measurement, do you?
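Error correction often comes down to normalizing formatting and mapping known variants to one canonical value. The sketch below assumes a hand-built `CANONICAL` lookup table of city-name variants; in a real project that table would come from domain knowledge or data profiling.

```python
# Hypothetical mapping of known typos and variants to one canonical spelling
CANONICAL = {"new york": "New York", "ny": "New York", "nyc": "New York", "newyork": "New York"}

def clean_city(raw):
    """Collapse extra whitespace, lowercase, then map known variants to one spelling."""
    key = " ".join(raw.split()).lower()
    return CANONICAL.get(key, key.title())  # unknown values just get consistent casing

print([clean_city(c) for c in ["  new york", "NYC", "NewYork", "boston "]])
# → ['New York', 'New York', 'New York', 'Boston']
```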

Outlier handling

Just as a pastry has no place for spicy peppers or onions, a dataset can contain extreme values that don't belong with the rest. For accurate modeling, outliers need to be detected and then removed or capped.
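One common way to detect outliers is the interquartile range (IQR) rule: values more than 1.5 times the IQR below the first quartile or above the third quartile are flagged. The prices below are made up, and the 1.5 multiplier is a conventional default rather than a fixed rule.

```python
from statistics import quantiles

def iqr_bounds(values, k=1.5):
    """Return the (low, high) fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

prices = [210, 225, 240, 250, 260, 275, 290, 2900]  # 2900 looks like a data-entry error
low, high = iqr_bounds(prices)
kept = [p for p in prices if low <= p <= high]
print(kept)  # → [210, 225, 240, 250, 260, 275, 290]
```

Instead of dropping flagged values, you could also cap them at the fences, which keeps the row while limiting the outlier's influence.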

The example below illustrates these data cleaning techniques in action.

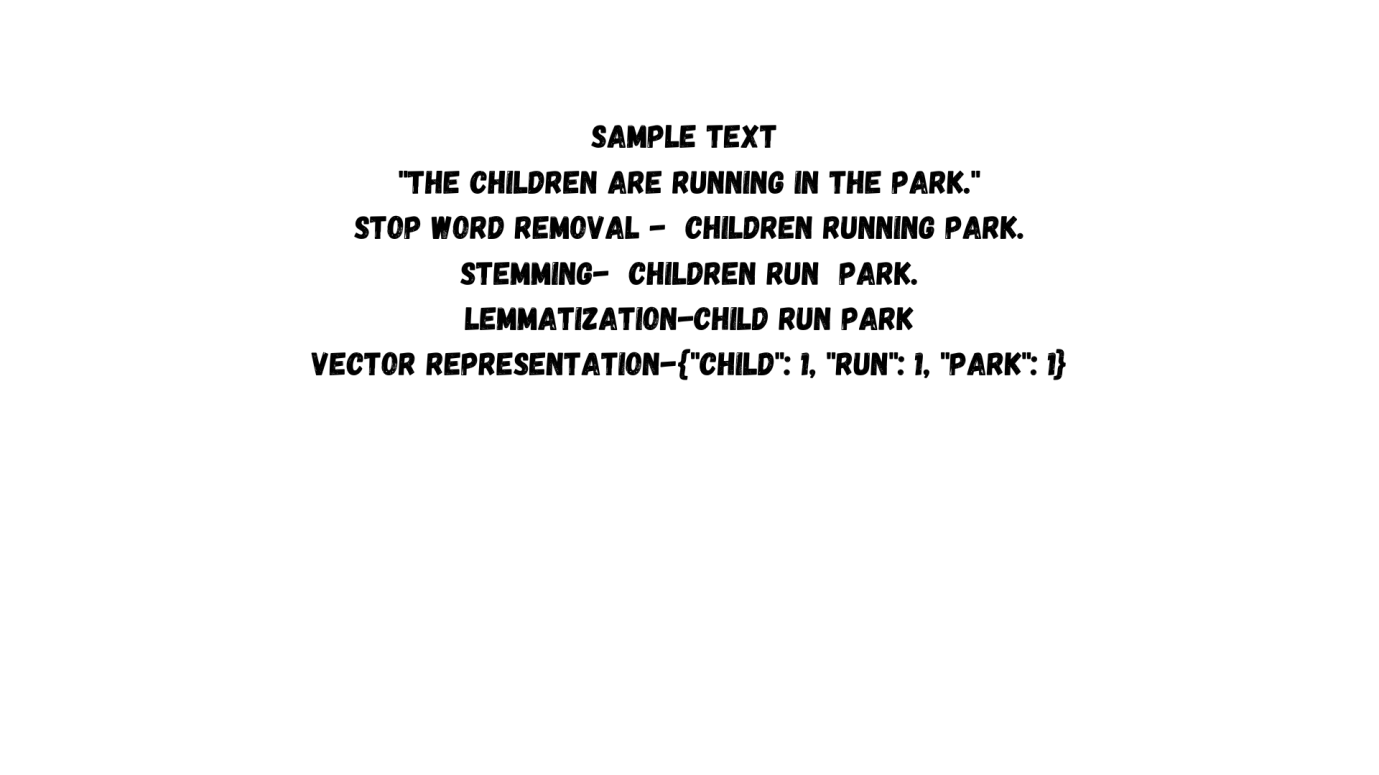

For text data, cleansing means skimming and trimming off unnecessary text, like cleaning and removing the unwanted portions of vegetables or meat. One such process is stop word removal, where you cut words like "the" and "and" that don’t add much meaning.

There are also stemming and lemmatization, which reduce different forms of a word to a common base form so that the data stays consistent across the entire dataset. Example: treating "running" and "ran" as "run". Stemming does this by chopping off suffixes, so it handles "running" but not an irregular form like "ran"; lemmatization uses a dictionary of word forms and handles both.

Finally, vectorization transforms text into numerical vectors so that machine learning models can process it and derive insights. Let’s take a sample text and perform these preprocessing operations on it.
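Here is a minimal sketch of these operations on a sample sentence, using only the Python standard library. The stop-word list and the `LEMMAS` lookup are tiny hypothetical stand-ins; real projects would use a full stop-word list and a proper stemmer or lemmatizer from an NLP library.

```python
import re
from collections import Counter

STOP_WORDS = {"the", "and", "a", "is", "to", "of", "in"}                  # tiny illustrative list
LEMMAS = {"ran": "run", "running": "run", "runs": "run", "dogs": "dog"}  # toy lemma lookup

def preprocess(text):
    # 1. Clean: lowercase and replace anything that is not a letter or space
    text = re.sub(r"[^a-z\s]", " ", text.lower())
    # 2. Tokenize on whitespace and remove stop words
    tokens = [t for t in text.split() if t not in STOP_WORDS]
    # 3. Lemmatize with the toy lookup; unknown words pass through unchanged
    return [LEMMAS.get(t, t) for t in tokens]

def vectorize(tokens, vocabulary):
    # 4. Vectorize: count how often each vocabulary word appears
    counts = Counter(tokens)
    return [counts[w] for w in vocabulary]

sample = "The dog ran, and the dogs are running in the park!"
tokens = preprocess(sample)
vocab = sorted(set(tokens))
print(tokens)                           # → ['dog', 'run', 'dog', 'are', 'run', 'park']
print(vocab, vectorize(tokens, vocab))  # → ['are', 'dog', 'park', 'run'] [1, 2, 1, 2]
```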

Data transformation

Just as you chop and spice ingredients to get the perfect flavors, data transformation in machine learning is all about preparing raw data for optimal model learning and analysis.

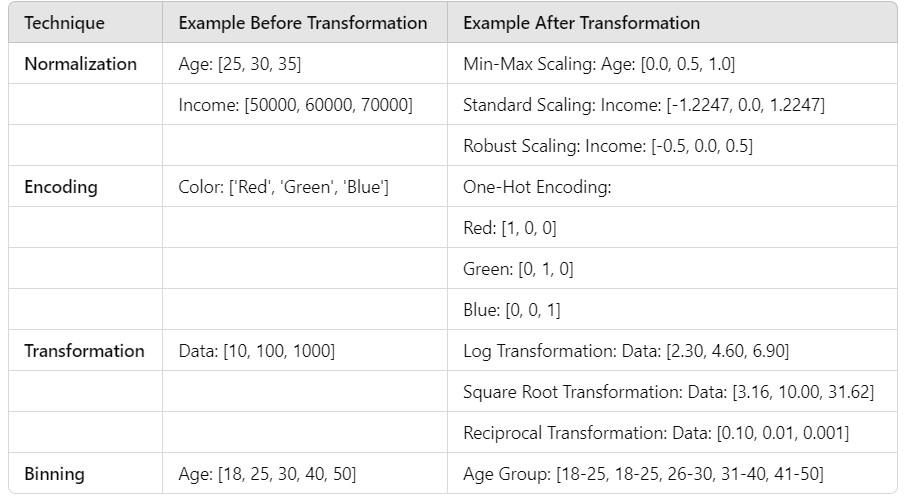

Normalization

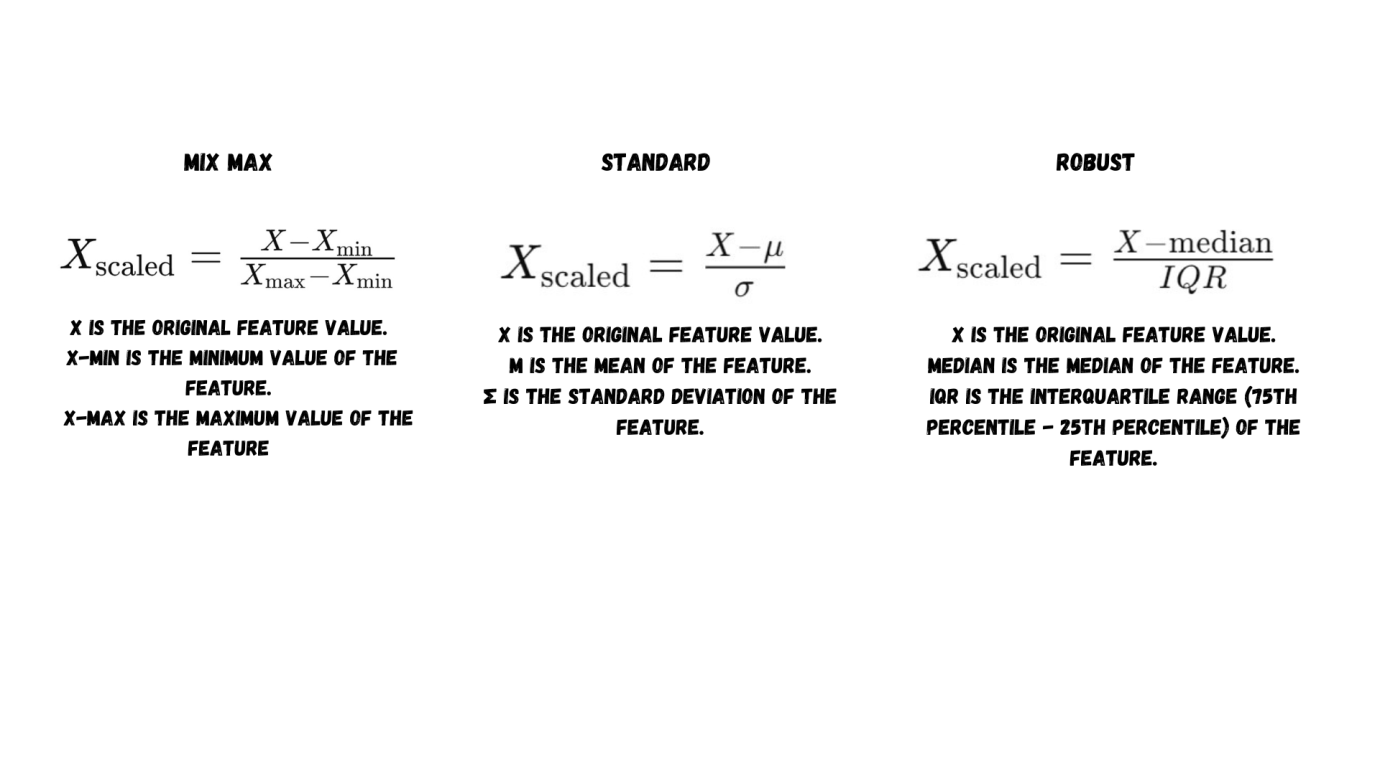

Extreme values in a dataset can overpower the dish, so features are rescaled to comparable ranges. Min-Max Scaling rescales values to a fixed range, typically 0 to 1. Standard Scaling centers each feature at a mean of zero with unit variance, so no ingredient (feature) dominates just because of its units, similar to ensuring each spice contributes in balance to the overall flavor profile. Robust Scaling uses the median and the interquartile range instead, so extreme values, like overly potent spices, don’t overpower the dish and the taste stays balanced.
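The three scalers can be sketched in a few lines of plain Python. The income values are hypothetical, with one extreme value included to show how each scaler reacts to it; libraries such as scikit-learn provide ready-made versions (`MinMaxScaler`, `StandardScaler`, `RobustScaler`).

```python
from statistics import mean, median, pstdev, quantiles

def min_max_scale(xs):
    """Map values linearly onto [0, 1]."""
    lo, hi = min(xs), max(xs)
    return [(x - lo) / (hi - lo) for x in xs]

def standard_scale(xs):
    """Shift to mean 0 and divide by the (population) standard deviation."""
    mu, sigma = mean(xs), pstdev(xs)
    return [(x - mu) / sigma for x in xs]

def robust_scale(xs):
    """Shift by the median and divide by the interquartile range."""
    q1, _, q3 = quantiles(xs, n=4, method="inclusive")
    med = median(xs)
    return [(x - med) / (q3 - q1) for x in xs]

incomes = [30, 35, 40, 45, 500]  # hypothetical, in thousands; 500 is an outlier
robust = robust_scale(incomes)
print(robust)  # → [-1.0, -0.5, 0.0, 0.5, 46.0]
```

With the outlier present, min-max scaling squeezes the four typical incomes into roughly 0 to 0.03, while robust scaling keeps them spread between -1 and 0.5.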

Encoding

What if the ingredients have local names that a chef can’t understand? In machine learning, encoding converts categorical variables into a numerical form that models can work with, because many algorithms cannot handle categorical data directly. There are two common types.

Integer or label encoding, where every category is assigned an integer. Example: pizza is 1, pasta is 2, and bruschetta is 3.

One-hot encoding, where you create a separate binary column for each category and set it to 1 when the row belongs to that category and 0 otherwise.
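A minimal sketch of both encodings on the dish example, in plain Python. Numbering categories from 1 in order of first appearance is an arbitrary choice made here for illustration.

```python
dishes = ["pizza", "pasta", "bruschetta", "pasta"]

# Label encoding: map each category to an integer (1-based, order of first appearance)
labels = {}
for d in dishes:
    labels.setdefault(d, len(labels) + 1)
label_encoded = [labels[d] for d in dishes]
print(label_encoded)  # → [1, 2, 3, 2]

# One-hot encoding: one binary column per category
categories = list(labels)  # ['pizza', 'pasta', 'bruschetta']
one_hot = [[1 if d == c else 0 for c in categories] for d in dishes]
print(one_hot)  # → [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
```

Label encoding implies an order (bruschetta "greater than" pizza) that the categories don't really have, which is why one-hot encoding is usually preferred for unordered categories, at the cost of one extra column per category.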

Transformation

Here, you alter the distribution of the data, for example to reduce skew, so that models can learn from it more easily, similar to refining cooking techniques to improve texture and taste. Common techniques include logarithmic, square root, and reciprocal transformations.
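The sketch below applies each transformation to hypothetical, heavily right-skewed prices. A log transform needs strictly positive inputs (`math.log1p` is a common workaround when zeros occur), and a reciprocal transform needs nonzero inputs.

```python
import math

prices = [100, 1_000, 10_000, 100_000]  # hypothetical values spanning several orders of magnitude

log_t = [math.log10(p) for p in prices]  # → [2.0, 3.0, 4.0, 5.0]: evenly spaced after the transform
sqrt_t = [math.sqrt(p) for p in prices]  # milder compression than the log
recip_t = [1 / p for p in prices]        # reverses the order: large values become small
```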

Binning

Have you ever organized ingredients by flavor before cooking? Binning works the same way: you group a continuous variable, such as age, into ranges so that each group contributes meaningfully to the final model.
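Binning can be sketched with the standard library's `bisect` module. The bin edges and labels below are hypothetical; each age falls into the bin whose lower edge it meets or exceeds.

```python
from bisect import bisect_right

EDGES = [18, 35, 50, 65]                                  # hypothetical lower edges of each bin
LABELS = ["under 18", "18-34", "35-49", "50-64", "65+"]   # one more label than edges

def age_bin(age):
    """Return the label of the bin that `age` falls into."""
    return LABELS[bisect_right(EDGES, age)]

print([age_bin(a) for a in [12, 18, 34, 35, 64, 70]])
# → ['under 18', '18-34', '18-34', '35-49', '50-64', '65+']
```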

Feature extraction

This is where the real cooking happens. Here, you extract the most relevant features from raw data to build effective models. Here is how feature extraction works for text, image, and time series data.

Feature extraction for text data

Bag-of-words representation: Like counting the pieces of meat or vegetables you add to a soup, you count how often each word appears in a document. This simplifies text analysis by representing each document as a set of word frequencies, ignoring grammar and word order.
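A minimal bag-of-words sketch in plain Python, using a few made-up recipe titles as documents and simple whitespace tokenization:

```python
from collections import Counter

docs = ["tomato soup with basil", "tomato and basil pasta", "chicken soup"]
tokenized = [d.split() for d in docs]                  # naive whitespace tokenization
vocab = sorted({w for doc in tokenized for w in doc})  # every distinct word, alphabetically
counts = [Counter(doc) for doc in tokenized]
bow = [[c[w] for w in vocab] for c in counts]          # one row per document, one column per word

print(vocab)  # → ['and', 'basil', 'chicken', 'pasta', 'soup', 'tomato', 'with']
print(bow)    # → [[0, 1, 0, 0, 1, 1, 1], [1, 1, 0, 1, 0, 1, 0], [0, 0, 1, 0, 1, 0, 0]]
```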

TF-IDF vectorization: A pinch of salt and a dollop of cream aren’t the same. TF-IDF (Term Frequency-Inverse Document Frequency) weights each word by how often it appears in a document, discounted by how many documents in the collection contain it, so common words count for less and distinctive words count for more.
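Using the same kind of hypothetical documents, here is one textbook TF-IDF variant: term frequency as count divided by document length, multiplied by the natural log of (number of documents / document frequency). Libraries differ in the details; scikit-learn's `TfidfVectorizer`, for example, smooths the IDF term by default.

```python
import math
from collections import Counter

docs = ["tomato soup with basil", "tomato and basil pasta", "chicken soup"]
tokenized = [d.split() for d in docs]
n_docs = len(tokenized)

# Document frequency: how many documents contain each word at least once
df = Counter(word for doc in tokenized for word in set(doc))

def tfidf(doc):
    counts = Counter(doc)
    return {
        word: (count / len(doc)) * math.log(n_docs / df[word])  # tf * idf
        for word, count in counts.items()
    }

weights = [tfidf(doc) for doc in tokenized]
print({w: round(v, 3) for w, v in weights[2].items()})  # → {'chicken': 0.549, 'soup': 0.203}
```

In the third title, the rare word "chicken" outweighs "soup", which also appears in another document. A word that appeared in every document would get a weight of zero.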

Word embeddings: Some ingredients complement each other and some don’t. To capture how words relate to each other, this method represents words as vectors in a high-dimensional space, where words used in similar contexts lie close together. This helps you grasp relationships between words, such as the pairing of tomato and basil, so you can extract better features.
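Real embeddings are learned from large text corpora and typically have hundreds of dimensions, but the idea of "closeness" can be shown with made-up three-dimensional vectors and cosine similarity. The vectors below are purely hypothetical.

```python
import math

# Hypothetical 3-dimensional "embeddings"; real ones are learned and much larger
vectors = {
    "tomato":    [0.9, 0.8, 0.1],
    "basil":     [0.8, 0.9, 0.2],
    "chocolate": [0.1, 0.2, 0.9],
}

def cosine_similarity(u, v):
    """Cosine of the angle between u and v; higher means the vectors point in a more similar direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

print(round(cosine_similarity(vectors["tomato"], vectors["basil"]), 2))      # → 0.99
print(round(cosine_similarity(vectors["tomato"], vectors["chocolate"]), 2))  # → 0.3
```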

Feature extraction for image data

Edge detection: Chopping vegetables finely is a skill. Likewise, edge detection identifies edges and boundaries in images, which are important visual features.
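One classic edge detector is the Sobel operator, which estimates horizontal and vertical intensity gradients with two 3x3 kernels. The sketch below runs it on a tiny hypothetical grayscale image with a dark left half and a bright right half. Like most image libraries and CNN layers, it slides the kernel without flipping it (cross-correlation), which for Sobel only changes the sign of the gradient.

```python
import math

# Hypothetical 5x5 grayscale image: dark (0) on the left, bright (9) on the right
image = [[0, 0, 0, 9, 9] for _ in range(5)]

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # responds to left-right changes
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # responds to top-bottom changes

def apply_kernel(img, kernel):
    """Slide a 3x3 kernel over every interior pixel (no padding, no kernel flip)."""
    h, w = len(img), len(img[0])
    return [
        [sum(kernel[a][b] * img[i + a - 1][j + b - 1] for a in range(3) for b in range(3))
         for j in range(1, w - 1)]
        for i in range(1, h - 1)
    ]

gx, gy = apply_kernel(image, SOBEL_X), apply_kernel(image, SOBEL_Y)
magnitude = [[math.hypot(x, y) for x, y in zip(rx, ry)] for rx, ry in zip(gx, gy)]
for row in magnitude:
    print(row)  # each row: [0.0, 36.0, 36.0]
```

The gradient magnitude is zero in the flat dark region and large where the brightness changes, which is exactly where the edge is.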

Feature detection: Can you identify the key ingredients of a soup from just one sip? Feature detection is similar: it recognizes and highlights specific elements in images, such as corners or distinctive regions, which is crucial for classification and object detection.

Convolutional Neural Networks (CNNs): CNNs are deep learning models used for image identification and classification. They extract hierarchical features from images, building up from simple patterns such as edges to intricate shapes and whole objects, like how a trained chef sniffs out the perfect balance between ingredients.

Feature extraction for time series data

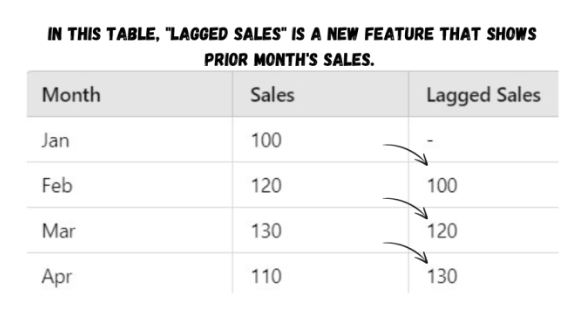

Lag features: Like tracing back a few steps to recall what you added, lag features use past values of a series as features to capture temporal dependencies.
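A minimal sketch of lag features on a hypothetical daily sales series. `add_lags` is an illustrative helper, and the first rows get `None` because there is no earlier history to look back on.

```python
sales = [120, 135, 128, 150, 162]  # hypothetical daily sales

def add_lags(series, lags=(1, 2)):
    """Return one row per time step with the current value and its lagged values."""
    rows = []
    for t, value in enumerate(series):
        row = {"value": value}
        for k in lags:
            row[f"lag_{k}"] = series[t - k] if t - k >= 0 else None  # no history yet
        rows.append(row)
    return rows

rows = add_lags(sales)
print(rows[2])  # → {'value': 128, 'lag_1': 135, 'lag_2': 120}
```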

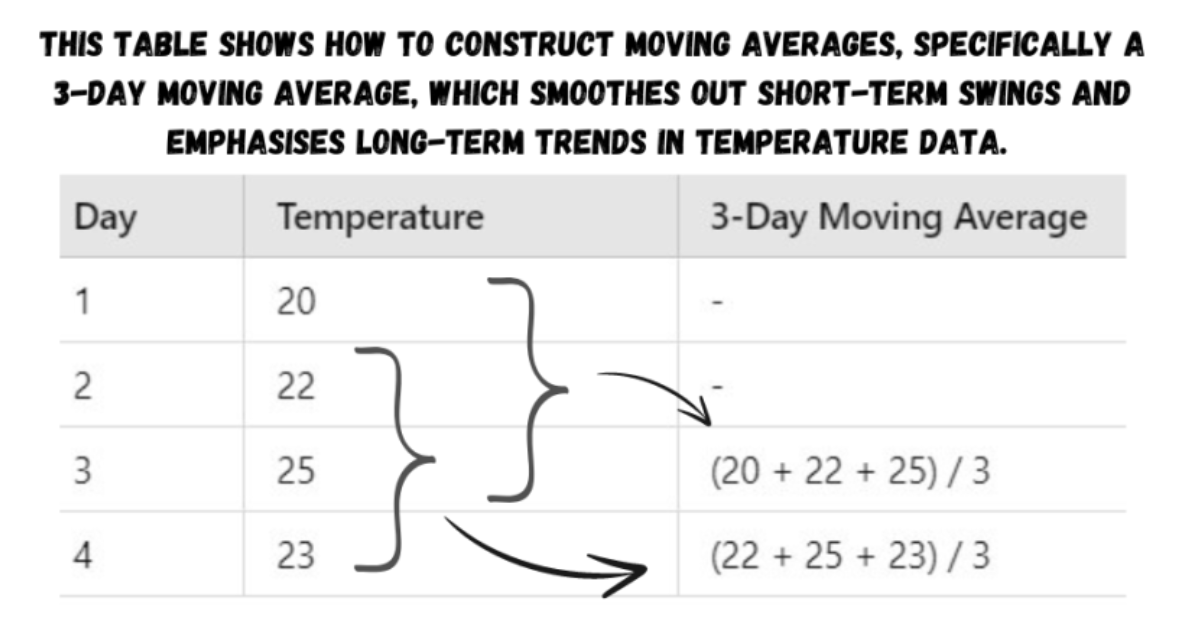

Moving averages: Perfectly balanced heat ensures even cooking. In time series data, you will often smooth out fluctuations using moving averages, which average each point with its neighbors to even out the data and reveal underlying trends.
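A trailing moving average over a window of three points can be sketched like this; the series is hypothetical, and a centered window is an equally common choice.

```python
def moving_average(series, window=3):
    """Trailing moving average; the first window-1 points have no full window and are skipped."""
    return [sum(series[t - window + 1 : t + 1]) / window for t in range(window - 1, len(series))]

smoothed = moving_average([10, 14, 9, 16, 11, 15], window=3)
print(smoothed)  # → [11.0, 13.0, 12.0, 14.0]
```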

Time series decomposition techniques: Master chefs often break a dish down into ingredients, base, and seasoning to see how each one contributes. Similarly, time series decomposition breaks data down into trend, seasonal, and residual components, making each easier to analyze.
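The sketch below implements a basic classical additive decomposition in plain Python: a centered moving average estimates the trend, averaging the detrended values at each position of the cycle gives the seasonal pattern, and what remains is the residual. It assumes an odd period to keep the moving average simple, and the series is synthetic (a linear trend plus a repeating pattern of +3, -1, -2). In practice, `statsmodels.tsa.seasonal.seasonal_decompose` offers a ready-made version.

```python
def decompose_additive(series, period):
    """Classical additive decomposition: series = trend + seasonal + residual (odd period assumed)."""
    n, half = len(series), period // 2
    # Trend: centered moving average; undefined near the edges
    trend = [None] * n
    for t in range(half, n - half):
        trend[t] = sum(series[t - half : t + half + 1]) / period
    # Seasonal: average detrended value at each position in the cycle
    buckets = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(series[t] - trend[t])
    raw = [sum(b) / len(b) for b in buckets]
    offset = sum(raw) / period
    pattern = [s - offset for s in raw]  # force the seasonal pattern to sum to zero
    seasonal = [pattern[t % period] for t in range(n)]
    # Residual: whatever trend and seasonality don't explain
    residual = [series[t] - trend[t] - seasonal[t] if trend[t] is not None else None for t in range(n)]
    return trend, seasonal, residual

# Synthetic series: linear trend 10 + 2t plus a repeating seasonal pattern
true_pattern = [3, -1, -2]
series = [10 + 2 * t + true_pattern[t % 3] for t in range(9)]
trend, seasonal, residual = decompose_additive(series, period=3)
print(seasonal[:3])  # → [3.0, -1.0, -2.0]
```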

Principal component analysis

Like boiling down a sauce to bring out its essential flavors, PCA reduces the dimensionality of data. It projects high-dimensional data onto a lower-dimensional space, simplifying a large dataset into a smaller one while keeping as much of its variance, the essential flavor of the data, as possible. The reduced data is easier to explore, visualize, and handle, and it can help prevent issues like overfitting.
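PCA can be sketched with NumPy's singular value decomposition: center the data, take the top right-singular vectors as components, and project onto them. The data below is synthetic, three features that are noisy copies of one underlying signal, so a single component should capture nearly all the variance.

```python
import numpy as np

def pca(X, n_components):
    """Project X (samples x features) onto its top principal components using SVD."""
    X_centered = X - X.mean(axis=0)
    _, singular_values, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]  # directions of maximum variance
    explained = singular_values ** 2 / np.sum(singular_values ** 2)
    return X_centered @ components.T, explained[:n_components]

rng = np.random.default_rng(0)
signal = rng.normal(size=(100, 1))
# Three correlated features: scaled copies of one signal plus a little noise
X = np.hstack([signal, 2 * signal, -signal]) + 0.05 * rng.normal(size=(100, 3))

Z, ratio = pca(X, n_components=1)
print(Z.shape, ratio)  # one column now carries nearly all of the variance
```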

Feature selection

You don’t need your entire pantry to prepare a dish. Feature selection involves finding and picking the best features for the model.

Filter method - Finds the features that correlate most strongly with the target (dependent) variable. It uses statistical measures, such as correlation coefficients, to determine which features contribute most to the model's predictive power, independently of any particular model.
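A minimal filter-method sketch: rank features by the absolute value of their Pearson correlation with the target. The house data, including the deliberately irrelevant `house_number` column, is made up for illustration.

```python
from statistics import mean, pstdev

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = mean([(a - mx) * (b - my) for a, b in zip(x, y)])
    return cov / (pstdev(x) * pstdev(y))

# Hypothetical house data
features = {
    "size_sqft": [800, 1000, 1200, 1500, 1800],
    "rooms": [2, 3, 3, 4, 4],
    "house_number": [17, 4, 92, 33, 58],
}
price = [160, 205, 235, 300, 355]  # in thousands

ranking = sorted(features, key=lambda f: abs(pearson(features[f], price)), reverse=True)
print(ranking)  # → ['size_sqft', 'rooms', 'house_number']
```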

Wrapper method - Trains the model iteratively on different subsets of features and measures its performance on each subset. Based on the results, features are added or removed, like how you experiment while cooking, altering ingredients and quantities.
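Forward selection is one common wrapper strategy: start with no features, repeatedly add the one that most improves validation performance, and stop when the gain becomes negligible. This sketch assumes ordinary least squares as the model, mean squared error on a held-out split as the score, synthetic data where only features 0 and 2 matter, and an arbitrary `min_gain` threshold.

```python
import numpy as np

def validation_mse(cols, X_train, y_train, X_val, y_val):
    """Fit ordinary least squares (with intercept) on the chosen columns; return validation MSE."""
    A = np.column_stack([np.ones(len(X_train)), X_train[:, cols]])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    B = np.column_stack([np.ones(len(X_val)), X_val[:, cols]])
    return float(np.mean((B @ coef - y_val) ** 2))

def forward_selection(X_train, y_train, X_val, y_val, min_gain=1e-3):
    selected, remaining = [], list(range(X_train.shape[1]))
    best = float(np.mean((y_val - y_train.mean()) ** 2))  # score of the intercept-only model
    while remaining:
        scores = {j: validation_mse(selected + [j], X_train, y_train, X_val, y_val) for j in remaining}
        j_best = min(scores, key=scores.get)
        if best - scores[j_best] < min_gain:
            break  # no remaining feature improves the model enough
        selected.append(j_best)
        remaining.remove(j_best)
        best = scores[j_best]
    return selected

rng = np.random.default_rng(42)
X = rng.normal(size=(300, 3))
y = 3 * X[:, 0] + 2 * X[:, 2] + 0.1 * rng.normal(size=300)  # feature 1 is pure noise
selected = forward_selection(X[:200], y[:200], X[200:], y[200:])
print(selected)
```

Because the model is retrained for every candidate subset, wrapper methods get expensive quickly as the number of features grows.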

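Embedded method - Combines the strengths of the two methods above by performing feature selection as part of model training itself, for example through L1 (Lasso) regularization, which shrinks the coefficients of unhelpful features to zero. It selects feature subsets that improve model performance while staying computationally efficient and helping prevent issues like overfitting.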
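The Lasso (L1-regularized linear regression) is a classic embedded method. Below is a minimal coordinate-descent sketch in NumPy, assuming synthetic data where only features 0 and 2 matter and an illustrative penalty `alpha=0.1`; production code would normally use a library implementation such as scikit-learn's `Lasso`.

```python
import numpy as np

def soft_threshold(value, alpha):
    """Shrink value toward zero by alpha; anything within [-alpha, alpha] becomes 0."""
    return np.sign(value) * max(abs(value) - alpha, 0.0)

def lasso(X, y, alpha, n_iter=200):
    """Minimize (1/2n)*||y - Xw||^2 + alpha*||w||_1 by cyclic coordinate descent."""
    X = X - X.mean(axis=0)  # center features so no intercept term is needed
    y = y - y.mean()
    n, p = X.shape
    w = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        for j in range(p):
            partial_residual = y - X @ w + X[:, j] * w[j]  # residual ignoring feature j
            rho = X[:, j] @ partial_residual / n
            w[j] = soft_threshold(rho, alpha) / col_sq[j]
    return w

rng = np.random.default_rng(42)
X = rng.normal(size=(300, 3))
y = 3 * X[:, 0] + 2 * X[:, 2] + 0.1 * rng.normal(size=300)

w = lasso(X, y, alpha=0.1)
selected = np.flatnonzero(w)  # indices of features the model kept
print(np.round(w, 2), selected)
```

Features whose coefficients end up exactly zero are effectively dropped, so selection happens as a side effect of training.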

This is also where understanding what a sparse matrix is becomes valuable. Models often need to choose the most informative features from high-dimensional, sparse datasets and eliminate redundant or zero-heavy attributes. Techniques like one-hot encoding categorical variables or applying TF-IDF to text often produce sparse matrices, a natural byproduct of feature creation in which most entries are zero.
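Compressed sparse row (CSR) storage is one standard way to keep only the nonzero entries. The sketch below converts a small hypothetical one-hot matrix into the three CSR arrays (`data`, `indices`, `indptr`), the same layout that `scipy.sparse.csr_matrix` uses internally.

```python
def to_csr(dense):
    """Convert a dense list-of-lists matrix to CSR arrays (data, indices, indptr)."""
    data, indices, indptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                data.append(v)     # the nonzero value itself
                indices.append(j)  # its column
        indptr.append(len(data))   # where the next row starts in `data`
    return data, indices, indptr

# Hypothetical one-hot encoded city column: 4 rows, 5 possible cities, mostly zeros
one_hot = [
    [1, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0],
]
data, indices, indptr = to_csr(one_hot)
print(data, indices, indptr)  # → [1, 1, 1, 1] [0, 2, 4, 0] [0, 1, 2, 3, 4]
density = len(data) / (len(one_hot) * len(one_hot[0]))  # 4 of 20 entries are nonzero
```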

Feature creation

The complexity and flavor of a dish depend on the chosen ingredients. Similarly, feature creation involves generating new data attributes that improve the predictive capabilities of the ML model.

You can achieve this through the following methods.

Domain-specific creation: Like creating a dish to suit dietary and cultural preferences, domain-specific feature creation uses domain knowledge to build features aligned with business metrics.

Data-driven creation: Cooking with diverse ingredients can sometimes lead to amazing, flavorful dishes. Likewise, exploring a dataset can reveal meaningful patterns and new attributes. Example: calculating aggregations (like mean, sum, or count) or creating interaction features.

Synthetic creation: Ever treated your taste buds to a unique experience by blending two different flavors? Combining existing features can have a similar effect on the model’s performance by synthesizing new attributes.
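The three approaches can be sketched on a few hypothetical house records. The sale year, the column names, and the choice of features (house age, mean size per city, and a size-times-rooms interaction) are all illustrative assumptions.

```python
from collections import defaultdict

SALE_YEAR = 2024  # hypothetical year the houses are being priced in
houses = [
    {"city": "A", "year_built": 1995, "size_sqft": 1500, "rooms": 3},
    {"city": "A", "year_built": 2010, "size_sqft": 1800, "rooms": 4},
    {"city": "B", "year_built": 1980, "size_sqft": 1600, "rooms": 3},
]

# Domain-specific: derive the house's age from its construction year
for h in houses:
    h["house_age"] = SALE_YEAR - h["year_built"]

# Data-driven: aggregate a group statistic (mean size per city) back onto each row
sizes_by_city = defaultdict(list)
for h in houses:
    sizes_by_city[h["city"]].append(h["size_sqft"])
for h in houses:
    city_sizes = sizes_by_city[h["city"]]
    h["city_mean_size"] = sum(city_sizes) / len(city_sizes)

# Synthetic: combine two existing features into an interaction feature
for h in houses:
    h["size_x_rooms"] = h["size_sqft"] * h["rooms"]

print(houses[0])
# → {'city': 'A', 'year_built': 1995, 'size_sqft': 1500, 'rooms': 3, 'house_age': 29, 'city_mean_size': 1650.0, 'size_x_rooms': 4500}
```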

Feature iteration

Chefs often refine the dish's taste based on the results and external feedback. Similarly, data scientists enhance features based on how the model performs. Through iterative training and evaluation, they create or integrate new features while discarding the unnecessary ones. This is how iteration plays a role in creating high-performing, accurate models.

Feature split

Notice how chefs chop vegetables and herbs finely so they release more flavor? Sometimes splitting a feature into sub-features has a similar effect. This step uncovers nuanced factors, correlations, and patterns that could go unnoticed if the feature were treated as a single entity.
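A common feature split breaks a timestamp into parts that a model can use separately. This sketch uses Python's `datetime` module; which sub-features to keep (here year, month, weekday, hour, and a weekend flag) is a hypothetical choice that depends on the problem.

```python
from datetime import datetime

def split_timestamp(ts):
    """Break one timestamp string into sub-features a model can use separately."""
    dt = datetime.fromisoformat(ts)
    return {
        "year": dt.year,
        "month": dt.month,
        "weekday": dt.weekday(),  # Monday = 0, Sunday = 6
        "hour": dt.hour,
        "is_weekend": dt.weekday() >= 5,
    }

print(split_timestamp("2025-12-06 19:30:00"))
# → {'year': 2025, 'month': 12, 'weekday': 5, 'hour': 19, 'is_weekend': True}
```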

Feature engineering tools

In the culinary arts, there are numerous specialized instruments that make cooking easier and more efficient, each tailored to a certain task and preference. Just as chefs use blenders for smoothies and knives for precision cutting, machine learning practitioners have a variety of tools for feature engineering.

Featuretools: Whether your data lives in spreadsheets or databases, Featuretools automatically extracts intricate patterns and features from it, much like a seasoned cook getting the best out of the ingredients at hand.

TPOT: Like a true culinary scientist, TPOT experiments with many combinations, using genetic programming to search for optimized machine learning pipelines, including preprocessing and feature steps.

DataRobot: Offers advanced capabilities to improve model performance, including evaluating each feature and its contribution to results.

Alteryx: This is like a well-equipped dream kitchen, allowing data scientists to build and visualize complex data preparation and transformation workflows, all without coding.

H2O.ai: Another tool comparable to a culinary lab, it allows data scientists to prepare data and run ML experiments with high precision and flexibility.

Conclusion

Feature engineering is like the magic touch that takes machine learning models from good to outstanding, just as a chef transforms ingredients into a culinary masterpiece. It's all about refining raw data into insightful features that power accurate predictions. Imagine carefully selecting, slicing, and seasoning ingredients to create a dish that delights the palate. Similarly, data scientists meticulously clean and transform data, create new relationships, and apply advanced techniques to bring out hidden flavors and patterns, all while building an AI strategy for the business. Perfecting feature engineering isn't just about improving algorithms; it's about unlocking the true potential of data to get the desired results and deliver a practical model that meets the user's requirements.

Unlock better accuracy and insights with our data science services.